vsp 7200 monitor-by-isid

09-25-2018 03:45 PM

Running version 6.1.x at the moment on 8 x VSP 7200.

In a Nutshell:

I'm testing an IDS solution and configured a monitor-by-isid (with QoS = 3) to copy traffic across the SPB cloud to the IDS. We noticed a massive increase in ping latency across the core (from 1 ms to 300 ms). The config seemed to run fine for a few days or weeks before this incident. Unplugging the IDS seems to restore normality.

I suspect that I might be oversubscribing the core or the egress interface, since the setup is ((4 x 10GbE to a single 10GbE egress) x 4). The mirrored interfaces are normally around 20% utilized, but who knows!?
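To put rough numbers on that suspicion, here is a minimal sketch of the arithmetic. It assumes each group of four 10GbE source ports feeds one 10GbE egress, and that the 20% figure is an average that applies per direction. The function names are made up for illustration and are not anything from the switch.

```python
def mirror_offered_gbps(ports, port_speed_gbps, utilization, directions=1):
    """Average mirrored load in Gbps that a group of source ports offers.

    directions=1 mirrors Rx or Tx only; directions=2 mirrors both.
    """
    return ports * port_speed_gbps * utilization * directions


def oversubscription_ratio(offered_gbps, egress_gbps):
    """Offered mirror load divided by egress capacity; above 1.0 cannot fit."""
    return offered_gbps / egress_gbps


# Figures from the post: 4 x 10GbE sources per 10GbE egress, about 20% utilized.
# Assumption: the 20% figure is an average and applies to each direction.
for directions in (1, 2):
    offered = mirror_offered_gbps(4, 10, 0.20, directions)
    ratio = oversubscription_ratio(offered, 10)
    print(f"directions={directions}: offered={offered:.1f} Gbps, ratio={ratio:.2f}")
```

Under these assumptions a single mirrored direction stays below the egress capacity on average, while mirroring both directions does not. Averages also hide bursts, so this is only a sanity check and not proof either way.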

I do not see any indication of packet loss on the interface side.

show khi performance buffer-pool

show khi performance cpu

show khi performance memory

show khi performance process

show khi performance pthread

show khi performance slabinfo

show khi cpp port-statistics

show qos qosq-stats cpu-port

All of these outputs look normal, as far as I can see, since the dates of the Hi values do not correspond with the recent impact.

I thought low memory or buffers might be causing this, but I cannot see any indication of that in the stats.

My Question:

Are there other parameters I can watch to see drops or performance impact on the mirroring/monitoring side?

Any tips?

Other than this, the SPB core is super stable.

Disclaimer - This is my first post. Apologies if I missed or got something wrong.

2 REPLIES

10-22-2018 05:29 PM

I have now been running Fabric RSPAN across the VSP 7200 core for about a month without any problems. The key was to set a low QoS so as not to interfere with production traffic.

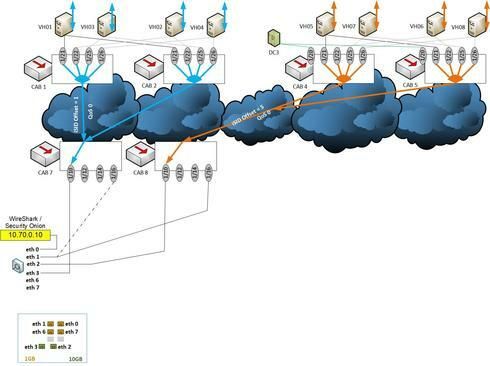

Also, I split the monitor ports across two end switches (the lower two in the diagram). All of these are 10GbE ports set to mirror Rx and Tx.

Understandably, when mirroring 8 x 10Gb ports to a single 10Gb port, one can expect some packet loss in the mirrored traffic. Overall, with this enabled in production, I did notice slight sluggishness in general for the VMs and applications.
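As a rough illustration of why loss is expected, the sketch below works out the average utilization at which the mirrored load would fill the monitor port. It assumes every source port is mirrored in both directions, as described above, and the function name is purely illustrative.

```python
def break_even_utilization(ports, port_speed_gbps, egress_gbps, directions=2):
    """Average per-direction utilization at which mirrored load equals egress capacity."""
    # Worst case: every source port runs at line rate in every mirrored direction.
    worst_case_gbps = ports * port_speed_gbps * directions
    return egress_gbps / worst_case_gbps


# Figures from the post: 8 x 10Gb source ports, Rx and Tx, to one 10Gb monitor port.
limit = break_even_utilization(ports=8, port_speed_gbps=10, egress_gbps=10)
print(f"Mirror egress fills at {limit:.2%} average utilization per direction")
```

With these figures the monitor port fills at 6.25% average utilization per direction, so anything busier than that would drop mirrored packets at the egress.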

I guess that the 40Gbps links between the ToR switches, with only two Backbone I-SIDs, were congested, though I did not search for evidence in the performance counters.

Overall, very happy with what was achieved.

10-16-2018 02:17 PM

Hello,

I do not have any experience with Fabric RSPAN, but I remember we had serious stability issues with the VSP 9000 when we were doing long-term port mirroring to a hardware probe. It took us days to find out what was causing the problems. On the other hand, I have never seen such behaviour on the VSP 8000.
