ESXi host LAG to VOSS vIST cluster

11-21-2023 06:47 AM - edited 11-21-2023 06:48 AM

Hello,

I am trying to set up a two-NIC LAG from an ESXi host to a vIST cluster built from two 5520 units running Fabric Engine.

At first I tried to set up an LACP-enabled SMLT connection to the ESXi host with a flex-uni config, but I kept getting a "Churn" error in the logs, and the MLT was in NORM mode, not SMLT mode.

Then I found an article saying that ESXi supports LACP only with vDS virtual switches, which is not what I have in my case.

I have other SMLT links with LACP enabled on that cluster, and they are working fine.

Is there any way to set up a LAG to an ESXi host from an SMLT VOSS cluster? Should the SMLT be configured without LACP enabled? Does anyone have a working config like this?

Regards

Robert

11-21-2023 12:24 PM

Why are you using Flex-UNIs? Is this a DvR deployment?

If you aren't using DvR, just use normal UNIs.

Anyway, this is 100% supported in VOSS. Here are two examples.

Here is a KB article: https://extreme-networks.my.site.com/ExtrArticleDetail?an=000083501&q=VOSS%20SMLT%20LACP%20confog

There is a working example in this TCG:

https://documentation.extremenetworks.com/TCG-TSG/VSP_CCTV_Deployment.pdf

11-30-2023 06:10 AM

Hi everyone,

I finally got it working. I simply redid the configuration from scratch: I shut the member interfaces down and brought them back up, then recreated the MLT with no LACP, with SMLT, and with flex-uni enabled.

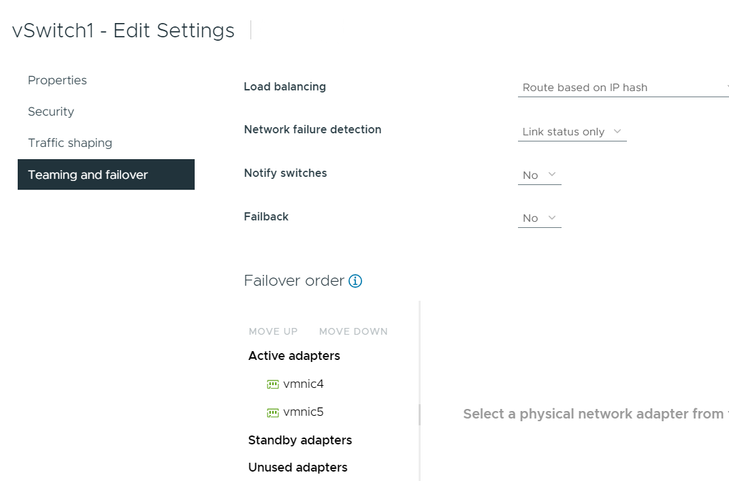

On the ESXi side, the vSwitch settings were as follows:

Thank you guys for your support!

BR, Robert

11-29-2023 12:19 AM

Hello,

Things I would check when troubleshooting:

- Are the I-SID and VLAN IDs correct (the same as for the L3 VLAN on the switch that routes that VLAN), and is the VLAN assigned as tagged to the flex-uni interfaces?

- Are the ESX MAC addresses learned in the correct VLAN?

- Do the MLT/SMLT interfaces have the same configuration on both cluster members, e.g. the same SMLT ID, the same flex-uni VLANs assigned, ...?

- The ESX vSwitch must have the same VLANs tagged as the SMLT cluster.

- If required, ONE VLAN can be assigned untagged (a so-called native/default/... VLAN) on the ESX and SMLT cluster flex-uni interfaces.
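The consistency checks in the list above can be sketched as a small comparison script. This is only an illustration, assuming the relevant settings have already been collected by hand (or by some other tool) into simple records; `MltConfig`, `compare_members`, and `vswitch_mismatches` are hypothetical names and do not correspond to any VOSS or VMware API.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class MltConfig:
    """Hypothetical hand-collected summary of one cluster member's MLT settings."""
    mlt_id: int
    smlt_enabled: bool
    tagged_vlans: FrozenSet[int]
    untagged_vlan: Optional[int] = None  # at most one untagged VLAN


def compare_members(a: MltConfig, b: MltConfig) -> List[str]:
    """Return human-readable mismatches between the two vIST cluster members."""
    problems = []
    if a.mlt_id != b.mlt_id:
        problems.append(f"MLT ID differs: {a.mlt_id} vs {b.mlt_id}")
    if not (a.smlt_enabled and b.smlt_enabled):
        problems.append("SMLT is not enabled on both members")
    if a.tagged_vlans != b.tagged_vlans:
        # Symmetric difference: VLANs present on exactly one member.
        diff = sorted(a.tagged_vlans ^ b.tagged_vlans)
        problems.append(f"tagged VLANs differ: {diff}")
    if a.untagged_vlan != b.untagged_vlan:
        problems.append(f"untagged VLAN differs: {a.untagged_vlan} vs {b.untagged_vlan}")
    return problems


def vswitch_mismatches(cluster: MltConfig, vswitch_vlans: Set[int]) -> Dict[str, List[int]]:
    """Compare the cluster's tagged VLANs with those tagged on the ESX vSwitch."""
    return {
        "only_on_cluster": sorted(cluster.tagged_vlans - vswitch_vlans),
        "only_on_vswitch": sorted(vswitch_vlans - cluster.tagged_vlans),
    }
```

An empty list from `compare_members` and two empty lists from `vswitch_mismatches` would mean the tagging and SMLT settings line up; it says nothing about I-SID assignment or MAC learning, which still need to be checked on the switches.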

regards

WillyHe

11-22-2023 06:35 AM

A vIST cluster allows systems and other network devices to be connected using either:

- Dynamic LAG (LACP)

- Static LAG (called, depending on the manufacturer, MLT/DMLT/SMLT on Extreme VOSS/Fabric Engine devices, Ether-channel, LACP static, ...)

I read that a dynamic LACP connection is already working OK for you, so I will not provide an example configuration for that.

This is a static SMLT configuration example:

The same MLT ID must be used on both vIST cluster members.

Create MLT

mlt 139 enable name "Static_LAG"

mlt 139 member 1/39,1/41

mlt 139 encapsulation dot1q (omit this line if the link is untagged)

Enable SMLT

interface mlt 139

smlt

exit

Add VLAN(s)

vlan mlt 2202 139
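Because the same MLT ID must be used on both vIST cluster members, it can help to generate the lines for both switches from one set of parameters. The sketch below is only an illustration: `static_smlt_config` is a hypothetical helper, and it emits nothing beyond the commands shown in the example above.

```python
from typing import Iterable, List


def static_smlt_config(mlt_id: int, name: str, members: Iterable[str],
                       vlans: Iterable[int], tagged: bool = True) -> List[str]:
    """Render the static SMLT example above for one cluster member."""
    lines = [
        f'mlt {mlt_id} enable name "{name}"',
        f"mlt {mlt_id} member {','.join(members)}",
    ]
    if tagged:
        # Only needed when the link carries tagged VLANs.
        lines.append(f"mlt {mlt_id} encapsulation dot1q")
    # Turn the MLT into an SMLT.
    lines += [f"interface mlt {mlt_id}", "smlt", "exit"]
    # Assign each VLAN to the MLT rather than to the individual ports.
    lines += [f"vlan mlt {vlan} {mlt_id}" for vlan in vlans]
    return lines


def cluster_config(mlt_id: int, name: str, members_by_switch: dict,
                   vlans: Iterable[int], tagged: bool = True) -> dict:
    """Render both members with the same MLT ID; only the member ports may differ."""
    vlans = list(vlans)
    return {
        switch: static_smlt_config(mlt_id, name, ports, vlans, tagged)
        for switch, ports in members_by_switch.items()
    }
```

Calling `static_smlt_config(139, "Static_LAG", ["1/39", "1/41"], [2202])` reproduces the seven command lines of the example. The switch names passed to `cluster_config` are arbitrary labels chosen by the caller.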

Note one difference between the LACP and static MLT configurations: for LACP the VLANs are assigned to the port(s), whereas for a static MLT the VLANs can also be assigned to the MLT itself.

Behavior difference between an LACP LAG and a static LAG (applicable to all vendors):

- In an LACP LAG, each connection is negotiated between the two partners, and once they agree the link becomes a member of the LACP group. LACP has a kind of built-in identity check to ensure the connected ports are on the same device and in the same LACP group.

- In a static LAG, a port becomes active in the LAG as soon as it is up, even when the ports are connected to different devices.

When connecting Extreme switches to each other, VLACP is preferably used to get the same behavior as the LACP "identity" check.
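The difference in how links join the group can be shown with a toy model. This is a deliberately simplified sketch, not the real LACP state machine: it assumes each link simply reports the partner's system ID and key, and `Link`, `static_lag_members`, and `lacp_lag_members` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Link:
    port: str
    is_up: bool
    partner_system_id: Optional[str] = None  # None: no LACP partner seen on this link
    partner_key: Optional[int] = None


def static_lag_members(links: List[Link]) -> List[str]:
    """Static LAG: link state is the only criterion for becoming active."""
    return [link.port for link in links if link.is_up]


def lacp_lag_members(links: List[Link]) -> List[str]:
    """Simplified LACP: a link joins only if its partner identity matches
    the identity of the first link that negotiated successfully."""
    reference = None
    active = []
    for link in links:
        if not link.is_up or link.partner_system_id is None:
            continue  # down, or the far end is not speaking LACP
        identity = (link.partner_system_id, link.partner_key)
        if reference is None:
            reference = identity
        if identity == reference:
            active.append(link.port)
    return active
```

With two links that are both up but cabled to different devices, `static_lag_members` returns both ports while `lacp_lag_members` returns only the first, which is the miscabling protection described above.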

regards

WillyHe

11-22-2023 05:54 AM

I normally like to use an LACP LAG to ESXi hosts, but the server people always complain about how expensive the licenses for that are. When reverting to VMware's own load balancing (per VM, etc.), each connection to the server is treated as just a normal VLAN trunk (or even untagged if you happen to have only one VLAN). VMware will distribute the VMs across those links in its own way. The return traffic needs to go via the same link that the VM is connected to, which the switch learns as soon as the VM "speaks". A VM move to another host or link is seen by the switch as soon as the VM sends its next frame. The downside is that if your SMLT pair is fed by another SMLT pair in the core, the ISC/ISL link can get heavily loaded. This is avoided when you connect the VMware servers with a proper LAG.

So, if your VMware servers use their own load balancing, forget MLT/SMLT on your switches for the ports facing the hosts and configure normal VLAN trunks.
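The MAC learning behavior described above, where the switch follows a VM as soon as it sends a frame on a new link, can be illustrated with a minimal forwarding-table model. This is a generic sketch of source-MAC learning, not Extreme's implementation; `MacTable` and the port names are hypothetical.

```python
from typing import Dict, Optional, Tuple


class MacTable:
    """Toy source-learning table keyed by (VLAN, MAC)."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[int, str], str] = {}

    def learn(self, vlan: int, src_mac: str, ingress_port: str) -> bool:
        """Record where src_mac was last seen; return True if the entry is new or moved."""
        key = (vlan, src_mac)
        changed = self._entries.get(key) != ingress_port
        self._entries[key] = ingress_port
        return changed

    def egress_port(self, vlan: int, dst_mac: str) -> Optional[str]:
        """Port used for return traffic; None means unknown, so the frame is flooded."""
        return self._entries.get((vlan, dst_mac))
```

After `learn(2202, vm_mac, "1/39")` return traffic goes to `1/39`; once the VM sends a frame that arrives on `1/41`, the next `learn` call moves the entry and return traffic follows it there.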