VRRP best practices, preempt, tracking, fabric routing, accept-mode, host mobility


12-03-2017 07:12 AM

Hi There,

Apologies for this question being a little long....

I'm looking for the community's thoughts on best practices for configuring VRRP.

Preempt

By default the preempt delay is 0 seconds, so the time to preempt to master would be 3 hellos, which are sent every 1 second. So my question is: would a 3-second preempt be deemed sufficient? I've seen some set to 90 seconds, the logic being to give the network a chance to stabilise before the router becomes master, which stops flapping. Is there a formula you could use? And what if you have more than 2 routers in the VRID?

This article with VRRP and FREB shows a preempt delay of 5 seconds:
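For reference, the "3 hellos" figure corresponds to the master-down interval that VRRPv2 (RFC 3768) defines for a backup router: three advertisement intervals plus a priority-dependent skew time. The sketch below only works through that arithmetic. The function names are made up for this example, and the priority values are assumptions, not taken from any configuration in this thread. Note that this timer governs how quickly a backup detects a failed master; it is separate from the configurable preempt delay.

```python
def skew_time(priority: int) -> float:
    """Skew time in seconds as defined in RFC 3768: (256 - priority) / 256."""
    if not 1 <= priority <= 255:
        raise ValueError("VRRP priority must be between 1 and 255")
    return (256 - priority) / 256


def master_down_interval(priority: int, advert_interval: float = 1.0) -> float:
    """Seconds a backup waits without hearing advertisements before taking over."""
    return 3 * advert_interval + skew_time(priority)


if __name__ == "__main__":
    # Example priorities only (assumed values for illustration).
    for prio in (100, 200, 254):
        print(prio, master_down_interval(prio))
```

With a 1-second advertisement interval, a higher-priority backup gets a slightly shorter interval, so when several backups share a VRID the highest-priority one times out first.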

https://extremeportal.force.com/ExtrArticleDetail?an=000080659

Accept Mode

In EOS I have in the past turned accept-mode on so that you can ping the VRRP VIP address, but in EXOS you do not need to do this. So I'm wondering what other practical or best-practice reasons there would be for turning it on. One example might be to support NTP over the VIP, as per the following GTAC article:

https://extremeportal.force.com/ExtrArticleDetail?an=000081389

Fabric Routing

Fabric routing was mentioned above, but I have given it its own heading for comment. In that example the preempt delay was set to 5 seconds, so I'm wondering whether the use of fabric routing, and even the number of participating routers in the same VRID, should be taken into account.

Tracking

VRRP can track pings, IP routes and VLANs. There are probably some obvious cases where that is a good idea, but I'm interested in practical examples and / or best practices. As an example, the GTAC case below shows how to configure VLAN tracking so that if a VLAN fails, VRRP fails over to the other router. That sounds great, but would it be considered good practice to do that on every VLAN?

https://extremeportal.force.com/ExtrArticleDetail?an=000061651

Host Mobility

An explanation for this is given here:

http://documentation.extremenetworks.com/exos/EXOS_21_1/VRRP/c_vrrp-host-mobility.shtml

I can see this possibly making sense when using fabric routing mode and when multiple routers are in the same VRID. In fabric routing mode with MLAG, my understanding is that traffic could end up at either switch in the MLAG pair, as determined by the hashing algorithm configured on the LAG, and then be routed from there. Both routers would essentially be advertising the same subnet, so asymmetric routing could take place, as return traffic could land at the other router (the other switch in the MLAG pair). I don't think that actually matters, though, because that switch would see the device as directly attached through the other link in the LAG and would therefore forward the traffic straight to the client instead of passing it back to the originating router.
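To illustrate the point about hashing, here is a minimal sketch of how a hash over the source and destination addresses might pick a LAG member link. It is not the algorithm EXOS actually uses: the CRC32 hash, the function name and the member names are all assumptions chosen for illustration. The only point is that each flow is pinned to one link, and different flows can land on different MLAG peers.

```python
import ipaddress
import zlib


def pick_lag_member(src_ip: str, dst_ip: str, members: list) -> str:
    """Map a flow to one LAG member using a hash of its address pair.

    Hypothetical model: real switches use vendor-specific hash functions
    and may include L4 ports or MAC addresses in the key.
    """
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    return members[zlib.crc32(key) % len(members)]


if __name__ == "__main__":
    # Assumed names for the two uplinks of an access stack towards an MLAG pair.
    uplinks = ["link-to-core1", "link-to-core2"]
    for host in range(10, 14):
        src = f"10.0.1.{host}"
        print(src, "->", pick_lag_member(src, "10.0.2.20", uplinks))
```

The same address pair always maps to the same link, while the return path is hashed independently by whichever device sends the reply, which is where the asymmetry comes from.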

Interested in your thoughts.

Many thanks in advance


06-27-2018 07:25 AM


01-24-2018 01:29 PM

Thanks for taking the time to look at this closely.

The OSPF link between the MLAG pair (across the ISC /30 point-to-point) is already in place, and currently there is no preempt. It's good to hear your feedback, as I wasn't sure whether I should add it. I did add it for testing, but it made no difference.

The pings are sent from a PC attached to a stack hanging off one core MLAG pair to a PC on a stack attached to the other core MLAG pair. When pinging different destinations, the drops seem to occur between these MLAG pairs.

I had considered splitting the DR and the BDR between the two MLAG pairs but couldn't see why that would help. It's absolutely worth trying, though, so I will follow your guidance and let you know the outcome.

Will play with the MLAG restore delay also, depending on results.

Many thanks


01-20-2018 05:07 PM

Although you have provided lots of info, I cannot positively determine whether the problem is related to VRRP forwarding starting too early on a booting switch. From where are you sending the ping packets that are lost? Do they need to be routed across the two-tier MLAG between the four switches? If yes, then this may well be missing routing information.

You can try enabling the MLAG restore delay (it is disabled by default; once enabled, the default time is 30 s). You might want to add a point-to-point OSPF transfer VLAN/network between the MLAG peers, using the same link as the ISC VLAN, to give the routing process time to fill the FIB before MLAG ports are enabled and traffic is received on the booting switch. Please note that this can only help if both source and destination of the ping packets are connected via MLAG ports.

For a general solution, either active/passive VRRP with preempt delay (do not use this with MLAG!) or a forwarding delay for fabric mode VRRP backup routers is needed. The latter is not documented to exist in EXOS or EOS.

BTW, it might be good to have the DR on one MLAG pair and the BDR on the other, but I'm not sure, this is just a hunch. You can configure OSPF priorities to achieve this.
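As a rough illustration of how priorities steer the election, the sketch below models a simplified cold-start DR/BDR election: routers with priority 0 are ineligible, the highest priority wins, and ties are broken by the highest router ID. The router IDs are the ones visible in this thread, but the priority values and the function name are hypothetical. Real OSPF does not preempt an existing DR, so a priority change only takes effect at the next election.

```python
import ipaddress


def elect_dr_bdr(routers: dict) -> tuple:
    """Return (DR, BDR) router IDs for a simplified cold-start election.

    routers maps router ID (dotted quad) -> OSPF interface priority.
    Simplification: ignores existing DR/BDR claims, so it only models
    the case where all routers start the election together.
    """
    eligible = [rid for rid, prio in routers.items() if prio > 0]
    ranked = sorted(
        eligible,
        key=lambda rid: (routers[rid], int(ipaddress.ip_address(rid))),
        reverse=True,
    )
    dr = ranked[0] if ranked else None
    bdr = ranked[1] if len(ranked) > 1 else None
    return dr, bdr


if __name__ == "__main__":
    # Hypothetical priorities: favour one router in each MLAG pair.
    priorities = {
        "10.0.255.201": 10,
        "10.0.255.202": 1,
        "10.0.255.203": 5,
        "10.0.255.204": 1,
    }
    print(elect_dr_bdr(priorities))
```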

Thanks,

Erik


01-07-2018 10:29 PM

Was meant to say thanks for posting....

Interestingly I am currently experiencing an issue that I think Erik is describing. Was running version 21.1 and now 22.4.

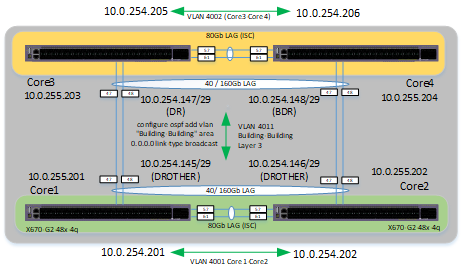

Here is a very high level overview of the network:

If Core 1 is rebooted whilst pinging Core 3 & Core 4 there is 7 dropped pings before service is restored, but pinging a device that hangs off Core 1 & Core 2, say a LAG'ed Switch or a PC hanging off it, its fine.

If Core 2 is rebooted for exactly the same scenario only one ping is dropped in each case.

Below are some observations I made:

Core 1 powered off. The show ospf output is the neighbour table before the power cycle (I still need to capture one during the process).

Farrer-Core2.1 # show ospf neighbour

| Neighbor ID | Pri | State | Up/Dead Time | Address | Interface | BFD Session State | Note |
|---|---|---|---|---|---|---|---|
| 10.0.255.201 | 1 | 2WAY /DROTHER | 00:02:43:15/00:00:00:04 | 10.0.254.145 | Building-Building | | Goes Down |
| 10.0.255.203 | 1 | FULL /DR | 00:02:43:15/00:00:00:05 | 10.0.254.147 | Building-Building | | |
| 10.0.255.204 | 1 | FULL /BDR | 00:02:43:20/00:00:00:00 | 10.0.254.148 | Building-Building | | |
| 10.0.255.201 | 0 | FULL /DROTHER | 00:02:43:14/00:00:00:04 | 10.0.254.201 | Core1-Core2 | | Goes Down |

01/06/2018 15:20:36.66

01/06/2018 15:20:36.66

Core 2 powered off. The show ospf output is the neighbour table before the power cycle (I still need to capture one during the process).

Farrer-Core1.1 # show ospf neighbor

| Neighbor ID | Pri | State | Up/Dead Time | Address | Interface | BFD Session State | Note |
|---|---|---|---|---|---|---|---|
| 10.0.255.202 | 1 | 2WAY /DROTHER | 00:02:45:52/00:00:00:01 | 10.0.254.146 | Building-Building | | Goes Down |
| 10.0.255.204 | 1 | FULL /BDR | 00:02:52:17/00:00:00:07 | 10.0.254.148 | Building-Building | | New Adjacency |
| 10.0.255.203 | 1 | FULL /DR | 00:02:52:21/00:00:00:02 | 10.0.254.147 | Building-Building | | |
| 10.0.255.202 | 0 | FULL /DROTHER | 00:02:45:51/00:00:00:01 | 10.0.254.202 | Core1-Core2 | | Goes Down |

Farrer-Core1.3 # show log

01/06/2018 15:27:04.30

01/06/2018 15:27:04.30

01/06/2018 15:27:04.30

01/06/2018 15:26:59.30

01/06/2018 15:26:58.30

01/06/2018 15:26:58.30

Each core pair is running MLAG, as shown by the ISC link. The two core pairs are connected via a common LAG, and OSPF broadcast is configured between them with Core 1 as the DR and Core 2 the BDR.

VRRP is configured so that there is a common VRID between all four switches for each of the VLANs that exist on all of them. There is also a VRID for VLANs that only exist on one pair, and another VRID for the VLANs that only exist on the other. Fabric routing mode is enabled on all the VRRP instances.

I think what I am seeing is what Erik is describing: some delay, perhaps related to OSPF learning new routes, before things normalise, and that is what causes this 7-second outage.

I'm pretty sure the same thing happens when running the same test on Core 3 and Core 4.

1) If this is indeed what's happening, do you know what I can do about it?

2) Could I adjust the restore timer?

3) I haven't looked it up, but what is that restore timer default time?

Many thanks in advance