- Extreme Networks

- Community List

- Switching & Routing

- ExtremeSwitching (EXOS/Switch Engine)

- ipv4Adj delete in progress never completes

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

ipv4Adj delete in progress never completes

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-19-2021 07:04 AM

We’ve had a re-occurring issue come up where a P2P OSPF session within an area (with virtual link) continually transitions. It appears EXOS is not deleting the interface properly so the v4 adjacency is in a stuck state causing remote VPWS LDP-LSPs to fail establishing if traversing this P2P path.

(x870-32c)

pe1 = 10.6.0.42/30 (10.6.2.5)

pe2 = 10.6.0.41/30 (10.6.3.1)

triggered interface deletion not deleting:

pe1.1 # debug hal show ipv4Adj

HAL IPv4 Adjacencies:

VrId Gateway MAC Intf RCnt Flags

================================================================

...removed other entries...

2 010.006.000.041 00:04:96:9E:1D:A0 7 2 RS-------

2 010.006.000.042 00:00:00:00:00:00 0 1 --D-L----

...removed other entries...

IP Adj Flags: R = Resolved, S = Setup in hdw, D = Delete in progress

I = Invalidated, L = Local (direct), B = Blackhole

H = Hash Table, E = Extended Hash, P = LPM Table

p = PBR, F = Flow Redirect, T = Tunnel, X = VXLAN tunnel

We originally believed the interface deletion trigger to be BFD related and was also recommended to move to latest release, however even after upgrading both devices to latest firmware 31.3.1.3 and disabling BFD on this particular link the issue re-occurs.

We aren’t sure what the trigger is and why the P2P IP gets in this delete state and never resolves itself (we’ve previously created another vlan then shifted traffic and new IPs seem to work for some time but this is not a permanent solution). It seems to trigger when either of these pe’s have a flap on their area 0 P2P paths to another area.

VPLS/MPLS is unaffected, P2P OSPF session never goes down

pe1.2 # show log | include ospf

08/19/2021 13:52:54.46 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = DOWN due to inactivity timer expiry.

08/19/2021 13:52:15.24 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = EX_START due to two way event.

08/19/2021 13:52:15.24 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = INIT due to hello received.

08/19/2021 13:52:15.24 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 0.0.0.0 to state = DOWN due to new neighbor.

08/19/2021 13:34:04.38 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = DOWN due to inactivity timer expiry.

08/19/2021 13:33:25.16 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = EX_START due to two way event.

08/19/2021 13:33:25.16 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 10.6.0.41 to state = INIT due to hello received.

08/19/2021 13:33:25.16 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.3.1 ipa 0.0.0.0 to state = DOWN due to new neighbor.

pe1.3 # show ospf neighbor pe1_to_pe2

Neighbor ID Pri State Up/Dead Time Address Interface

BFD Session State

==========================================================================================

10.6.3.1 1 FULL /DROTHER 38:14:10:08/00:00:00:05 10.6.0.41 pe1_to_pe2

NoneTotal number of neighbors: 1 (All neighbors in Full state)

pe2, you can’t P2P ping to pe1, mpls pings are fine:

pe2.1 # ping 10.6.0.42

Ping(ICMP) 10.6.0.42: 4 packets, 8 data bytes, interval 1 second(s).

Request timed out

Request timed out

^Cpe2.2 # ping mpls lsp prefix 10.6.2.5/32

Ping(MPLS) : 4 packets, 8 data bytes, interval 1 second(s).

48 bytes from 10.6.2.5: mpls_seq=0 ttl=128 time=0.876 ms

48 bytes from 10.6.2.5: mpls_seq=1 ttl=128 time=0.831 ms

^C

pe2 ospf transitions due to expiry of hellos because no p2p reply due to the deletion in progress?

pe2.3 # show log | in ospf

08/19/2021 14:52:11.73 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = INIT due to one way event.

08/19/2021 14:51:24.51 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = EX_START due to two way event.

08/19/2021 14:33:21.67 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = INIT due to one way event.

08/19/2021 14:32:34.43 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = EX_START due to two way event.

08/19/2021 14:16:41.58 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = INIT due to one way event.

08/19/2021 14:15:54.36 <Noti:ospf.neighbor.ChgState> Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = EX_START due to two way event.

pe2 original trigger of the area 0 path flap, then immediately afterwards this P2P issue arises:

2021-08-16 04:57:41 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = INIT due to one way event.

2021-08-16 04:56:53 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = EX_START due to two way event.

2021-08-16 04:49:25 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = FULL due to Loading done.

2021-08-16 04:49:15 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = LOADING due to exchange done event.

2021-08-16 04:49:15 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = EXCHANGE due to negotiation done event.

2021-08-16 04:49:15 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = EX_START due to two way event.

2021-08-16 04:49:15 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = INIT due to hello received.

2021-08-16 04:49:15 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 0.0.0.0 to state = DOWN due to new neighbor.

2021-08-15 19:25:19 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.6.2.5 ipa 10.6.0.42 to state = INIT due to one way event.

2021-08-15 19:24:29 Notification (5) OSPF.NEIGHBOR Changing the state of neighbor rtid 10.5.1.4 ipa 10.0.0.33 to state = DOWN due to interface Down.

Any recommendations on what to check to resolve this permanently would be appreciated.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-26-2021 08:26 AM

Hello Euphix,

I recommend you to open a GTAC case. So you can have the behavior officially clarified. You have already prepared the data very well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-26-2021 08:26 AM

Hello Euphix,

I recommend you to open a GTAC case. So you can have the behavior officially clarified. You have already prepared the data very well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-26-2021 08:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-26-2021 08:16 AM

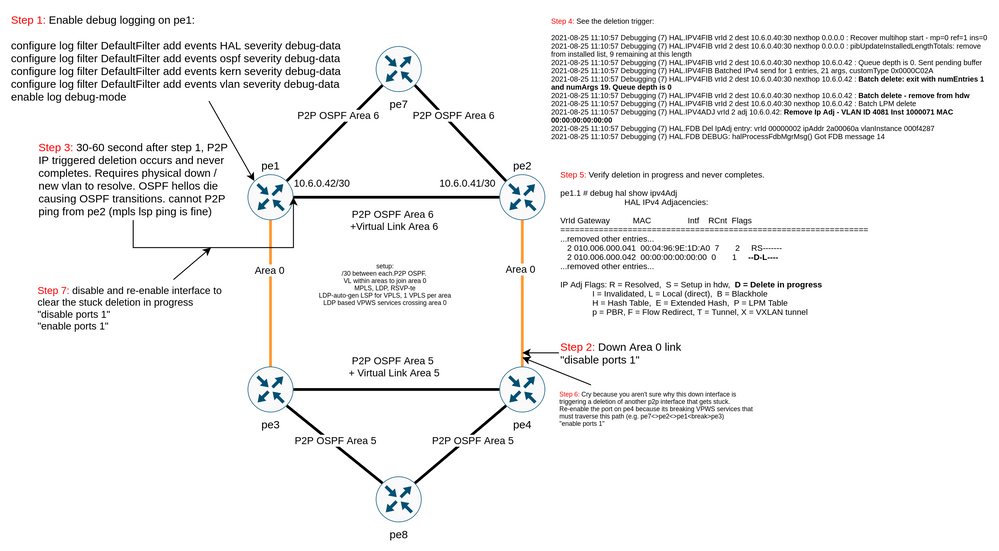

We can reproduce this now.

If some soul at extreme comes across this would be great to get an ack on this.

To reproduce this bug see picture or below:

4 device setup (x870-32c). MPLS,LDP,RSVP-te, active LDP-signalled VPLS (1 per area), VPWS services traversing area 0.

4 link setup.

2 devices in area 6 with P2P /30 OSPF between + Virtual link area 6.

2 devices in area 5 with P2P /30 OSPF between + Virtual link area 5.

Each device has 1 area 0 link with P2P /30 to the other area.

When experiencing a link down on an area 0 path, an ipv4Ajacency deletion is triggered on the far end P2P virtual link, but deletion never completes causing adjacency issues:

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 0.0.0.0 : Recover multihop start - mp=0 ref=1 ins=0

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 0.0.0.0 : pibUpdateInstalledLengthTotals: remove from installed list, 9 remaining at this length

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 10.6.0.42 : Queue depth is 0. Sent pending buffer

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB Batched IPv4 send for 1 entries, 21 args, customType 0x0000C02A

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 10.6.0.42 : Batch delete: exit with numEntries 1 and numArgs 19. Queue depth is 0

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 10.6.0.42 : Batch delete - remove from hdw

2021-08-25 11:10:57 Debugging (7) HAL.IPV4FIB vrId 2 dest 10.6.0.40:30 nexthop 10.6.0.42 : Batch LPM delete

2021-08-25 11:10:57 Debugging (7) HAL.IPV4ADJ vrId 2 adj 10.6.0.42: Remove Ip Adj - VLAN ID 4081 Inst 1000071 MAC 00:00:00:00:00:00

2021-08-25 11:10:57 Debugging (7) HAL.FDB Del IpAdj entry: vrId 00000002 ipAddr 2a00060a vlanInstance 000f4287

2021-08-25 11:10:57 Debugging (7) HAL.FDB DEBUG: halProcessFdbMgrMsg() Got FDB message 14

Verify stuck in delete state with: debug hal show ipv4Adj

pe1.1 # debug hal show ipv4Adj

HAL IPv4 Adjacencies:

VrId Gateway MAC Intf RCnt Flags

================================================================

...removed other entries...

2 010.006.000.041 00:04:96:9E:1D:A0 7 2 RS-------

2 010.006.000.042 00:00:00:00:00:00 0 1 --D-L----

...removed other entries...

IP Adj Flags: R = Resolved, S = Setup in hdw, D = Delete in progress

I = Invalidated, L = Local (direct), B = Blackhole

H = Hash Table, E = Extended Hash, P = LPM Table

p = PBR, F = Flow Redirect, T = Tunnel, X = VXLAN tunnel

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

08-24-2021 07:40 AM

Still trying to isolate this bug. Any suggestions are appreciated.

We are thinking of ripping out OSPF and migrating to ISIS purely because of this issue…

:*(