Network Design Considerations for Summit X670-48t (edge) and Summit X770 (core) Switches

11-17-2016 12:55 AM

Hello,

I am faced with the challenge of validating a fairly large switching environment to support uncompressed audio and video streaming for an audio visual distribution system.

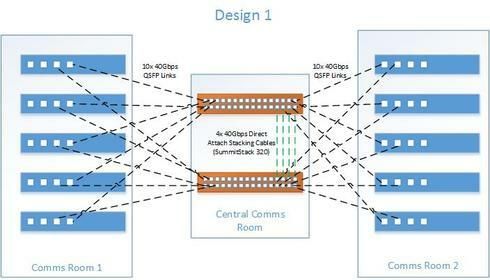

To summarise, I have 4 communications rooms which are required to house between 3 and 6 48 port 10Gbps switches. Each of these rooms must be connected to a central "core" switch stack to enable audio and video streams to be routed to any and all other switching locations. All attached endpoint devices shall be connected via 10Gbps SFP+ modules. I have provided simplified diagrams to attempt the explain the switch deployment options under consideration (Design 1 and Design 2).

I am looking to implement redundancy within the core switch stack, so that if a core switch fails, then connectivity between end points remains, albeit potentially with lower bandwidth.

As well as the above, I am looking to minimise the quantities of required QSFP modules and optic fiber runs where possible. The following diagrams are simplified showing:

Each edge node switch has 2x 10Gbps SFP connections going to each of the X770 core switches

Design 2:

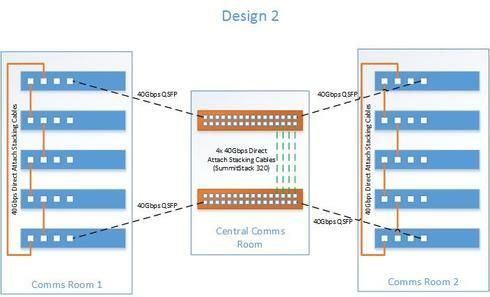

Each communications room "group" of switches is stacked using the rear 4x 40Gbps port VIM modules. A pair of QSFP links attached to the top and bottom switches and then connected to the X770 core switches.

The final layout will feature 2 additional switch groups (not shown).

I am looking for some feedback and advice on the presented design options. In my view, Design 2 is the most efficient design that uses significantly fewer QSFP modules and fibre runs. Perhaps Design 2 should feature an additional pair of QSFP links (to provide 4x 40Gbps between the comms room switch stack and the core switch stack)?

Any comments would be most appreciated.

Thanks,

Benjamin

I am faced with the challenge of validating a fairly large switching environment to support uncompressed audio and video streaming for an audio visual distribution system.

To summarise, I have 4 communications rooms which are required to house between 3 and 6 48 port 10Gbps switches. Each of these rooms must be connected to a central "core" switch stack to enable audio and video streams to be routed to any and all other switching locations. All attached endpoint devices shall be connected via 10Gbps SFP+ modules. I have provided simplified diagrams to attempt the explain the switch deployment options under consideration (Design 1 and Design 2).

I am looking to implement redundancy within the core switch stack, so that if a core switch fails, then connectivity between end points remains, albeit potentially with lower bandwidth.

As well as the above, I am looking to minimise the quantities of required QSFP modules and optic fiber runs where possible. The following diagrams are simplified showing:

- Two communications rooms each allocated with 5x Summit X670-48t switches, each fitted with 4x QSFP VIM modules, and:

- The central communications room allocated with 2x Summit X770 32 port QSFP switches, using direct attach cables to achieve a SummitStack 320 (single logical core switch).

Each edge node switch has 2x 10Gbps SFP connections going to each of the X770 core switches

Design 2:

Each communications room "group" of switches is stacked using the rear 4x 40Gbps port VIM modules. A pair of QSFP links attached to the top and bottom switches and then connected to the X770 core switches.

The final layout will feature 2 additional switch groups (not shown).

I am looking for some feedback and advice on the presented design options. In my view, Design 2 is the most efficient design that uses significantly fewer QSFP modules and fibre runs. Perhaps Design 2 should feature an additional pair of QSFP links (to provide 4x 40Gbps between the comms room switch stack and the core switch stack)?

Any comments would be most appreciated.

Thanks,

Benjamin

5 REPLIES

11-17-2016 01:16 AM

Hi Benjamin,

I have done something similar, but with a slight difference. Since I had plenty of 40GbE ports and most of the traffic would be fan-in traffic towards the core switches, I preferred to bond all of the 40GbE links from each edge switch to both core switches. This addresses both the redundancy and the capacity issues. Of course, you will spend more on 40GbE transceivers and accessories, but I believe the cost is worth the benefits. You will also have extra management complexity, because each edge switch is handled separately.

For simpler manageability, you could sacrifice two 40GbE ports on each edge switch for stacking. If you still want all of the remaining 40GbE ports bonded to the core switches, the stack is then limited to a maximum of four switches, because a shared port group is limited to eight members (CMIIW).

Best regards,
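The port arithmetic behind this suggestion can be sketched in a few lines of Python. This is only a rough illustration, not a validated design tool. It assumes each edge switch has 48 x 10GbE access ports and 4 x 40GbE QSFP ports (as described in the original post), and it takes the eight-member limit on a shared port group at face value from the reply above, which itself flagged the figure as unverified. The function names are hypothetical.

```python
# Rough uplink arithmetic for the edge-to-core options discussed in this thread.
# Assumptions (not confirmed by the thread or by vendor documentation):
#   - every edge switch has 48 x 10GbE access ports and 4 x 40GbE QSFP ports
#   - a shared port group (LAG) holds at most 8 members, as stated in the reply

ACCESS_PORTS = 48
ACCESS_GBPS = 10
QSFP_PORTS = 4
QSFP_GBPS = 40
LAG_MEMBER_LIMIT = 8


def oversubscription(switches, uplink_ports):
    """Ratio of total access bandwidth to total 40GbE uplink bandwidth."""
    access_gbps = switches * ACCESS_PORTS * ACCESS_GBPS
    uplink_gbps = uplink_ports * QSFP_GBPS
    return access_gbps / uplink_gbps


def max_stack_size(stacking_ports_per_switch):
    """Largest stack whose remaining QSFP ports still fit in one LAG."""
    free_ports = QSFP_PORTS - stacking_ports_per_switch
    return LAG_MEMBER_LIMIT // free_ports


if __name__ == "__main__":
    # Standalone edge switch with all four QSFP ports bonded to the core.
    print("standalone, 4 uplinks:", oversubscription(1, 4))
    # Stack of four, two QSFP ports per switch used for stacking.
    print("stack of 4, 8 uplinks:", oversubscription(4, 8))
    # Five-switch stack with one pair, then two pairs, of QSFP uplinks.
    print("stack of 5, 2 uplinks:", oversubscription(5, 2))
    print("stack of 5, 4 uplinks:", oversubscription(5, 4))
    print("max stack size with 2 stacking ports:", max_stack_size(2))
```

Under these assumptions, a five-switch stack with a single pair of 40GbE uplinks is oversubscribed 30:1, and adding a second pair halves that to 15:1. Whether either ratio is acceptable depends on how many uncompressed streams actually need to cross between rooms at the same time.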