Strange connectivity issues after VRRP change

12-11-2023 03:08 PM - edited 12-11-2023 03:18 PM

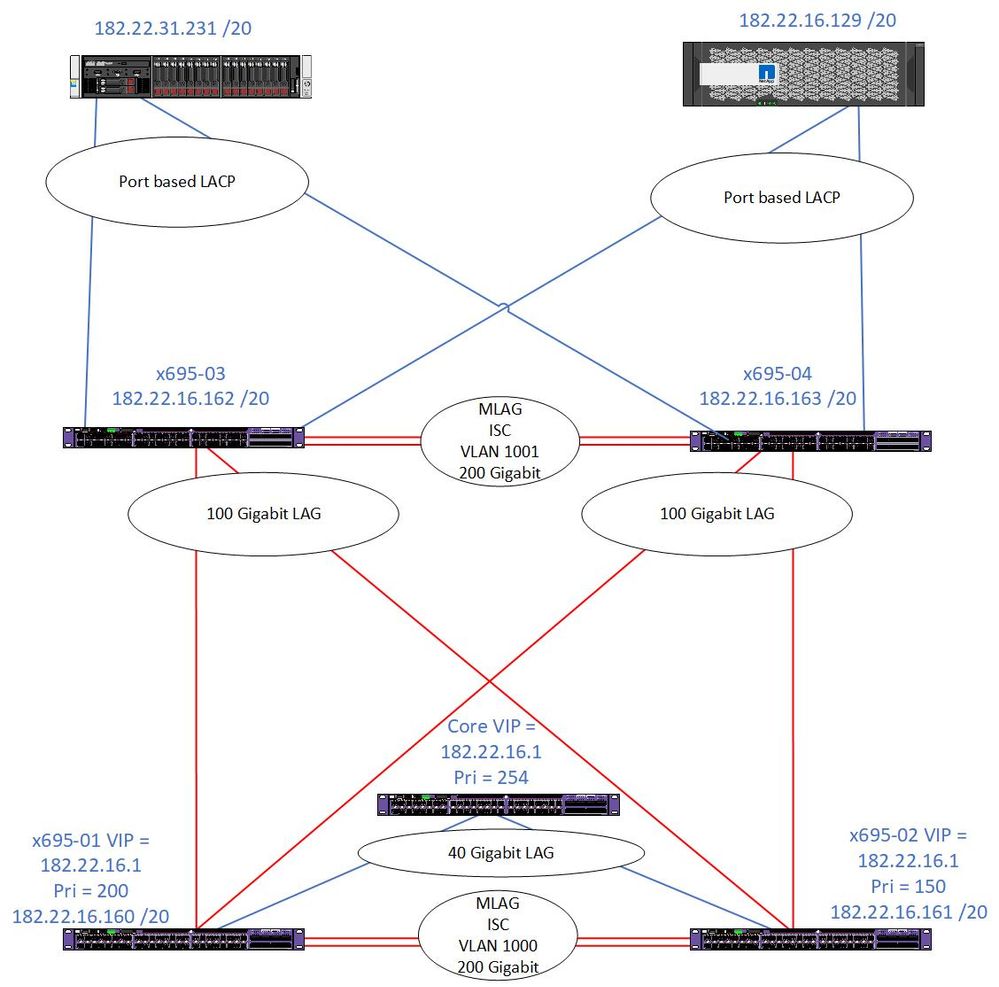

On 12/2, we disabled VRRP on our old Core switch (shown with a priority of 254 below) and disconnected it from the -01 and -02 switches. The -01 switch took over the master role as expected, with the -02 switch as backup. Since then, we have had connectivity issues to our remote site as well as within the same site, even within the same VLAN. The strangest part is that only physical servers (running three different Linux distros) are impacted, not any VMs, regardless of which VLAN they are in. Focusing on the 182.22.16.x /20 VLAN, one server (.231) occasionally locks up with a "util-srv-04 kernel: nfs: server netapp-06-int not responding, still trying" error (the NetApp in the error is the .129 server in the diagram). Neither the NetApp nor the switches show any errors in their logs.

We don't run VRRP on the 03/04 switches, but the servers in question use the VRRP VIP of 182.22.16.1 as their gateway, and that VIP lives on the 01/02 switches.

On the 01/02 switches, our VRRP setups are fairly simple:

-01

create vrrp vlan Internal_Appliances vrid 16

configure vrrp vlan Internal_Appliances vrid 16 priority 200

configure vrrp vlan Internal_Appliances vrid 16 accept-mode on

create vrrp vlan Routable vrid 3

enable vrrp vlan Internal_Appliances vrid 16

-02

create vrrp vlan Internal_Appliances vrid 16

configure vrrp vlan Internal_Appliances vrid 16 priority 150

configure vrrp vlan Internal_Appliances vrid 16 accept-mode on

create vrrp vlan Routable vrid 3

enable vrrp vlan Internal_Appliances vrid 16

The 03/04 switches have an IP assigned to only the one VLAN in question, so that we can reach them for management, and IP Forwarding is enabled on that VLAN (I think we can disable this, since no other VLAN on those switches has an IP to forward to). The 01/02 switches have more VLANs with IP addresses, and forwarding is enabled on those VLANs.

Does anyone have any thoughts on the following?

- Why would removing the old Core (an x670) and having two x695s take over as master/backup suddenly create issues like this?

- Why would we only be seeing the issue with physical servers instead of physical and virtual servers?

- Should we switch to a Master/Master VRRP setup?

- Do "vrrp vlan tracking", "vrrp route table tracking", or "vrrp ping table tracking" need to be configured to try and resolve the situation? If so, why do we need those configured now when things were working with the old core switch in place?

- Should "accept-mode" be enabled on the master and the backup?

12-13-2023 07:33 AM - edited 12-13-2023 12:15 PM

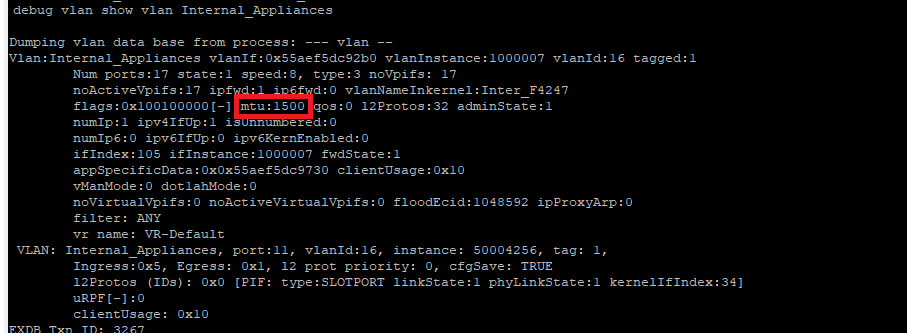

I think I found the problem. The physical servers have their MTU set to 9000, while the virtual servers use 1500. We saw packet fragmentation, and when we dropped the MTU on the physical servers to 1500, the problems went away. We already have jumbo frames enabled on all ports, but based on https://extreme-networks.my.site.com/ExtrArticleDetail?n=000001777 and https://documentation.extremenetworks.com/exos_commands_30.7/GUID-310E818D-0151-4933-8112-D67C3841AF... it sounds like we also need to run "configure ip-mtu 9194 vlan <vlan_name>" on all VLANs with an IP. Should we change that setting on all VLANs, or only on those that have an IP assigned and IP Forwarding enabled? Are there any risks to connectivity with this change, given that jumbo frames are already enabled? I still don't understand why we didn't see this issue with the old Core switch (x670-G2 running XOS 16.2.5.4), whereas we see it now with the x695s running 31.7.1.4.
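To illustrate the fragmentation described above, here is a minimal Python sketch of how many IPv4 fragments a full-size packet from each kind of server would need when it crosses a routed VLAN. It assumes a 20-byte IPv4 header with no options and assumes the routed VLANs were still at an IP MTU of 1500 (the thread does not state the value in effect); `fragment_count` is a hypothetical helper name, not part of any switch tooling.

```python
import math

IPV4_HEADER = 20  # bytes, assuming no IP options


def fragment_count(packet_size: int, ip_mtu: int) -> int:
    """Number of IPv4 fragments needed to carry packet_size bytes over ip_mtu."""
    if packet_size <= ip_mtu:
        return 1
    payload = packet_size - IPV4_HEADER
    # Every fragment except the last must carry a multiple of 8 payload bytes.
    per_fragment = (ip_mtu - IPV4_HEADER) // 8 * 8
    return math.ceil(payload / per_fragment)


# Host MTU 1500 = virtual servers, 9000 = physical servers (from the post).
for host_mtu in (1500, 9000):
    for vlan_ip_mtu in (1500, 9194):
        count = fragment_count(host_mtu, vlan_ip_mtu)
        print(f"host MTU {host_mtu}, VLAN IP MTU {vlan_ip_mtu}: {count} fragment(s)")
```

Under these assumptions, only the 9000-byte hosts ever need fragmentation, which would be consistent with the VMs at MTU 1500 being unaffected. If the sender sets the Don't Fragment bit, such packets would be dropped at the routed hop rather than fragmented.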

01-02-2024 05:40 AM

Hi Stephen,

I know my reply is late and you probably figured it out on your own by now, but maybe it's helpful for other people in the future.

You are definitely on the right track! See https://extreme-networks.my.site.com/ExtrArticleDetail?n=000001777

"Enabling jumbo-frames support on the ports only enables jumbo frame support for L2 switching. If jumbo-frames need to be routed you need to change the IP MTU for the VLANs that will route these.

This needs to be adjusted on every VLAN that will route the jumbo-frames (ingress and egress).

In EXOS, the default MTU size after enabling jumbo-frames is 9216."

So you only have to activate it on routed VLANs. Maybe this was the case on the old switches?
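The 9216 figure quoted here and the 9194 used in the "configure ip-mtu" command earlier in the thread differ by 22 bytes. A plausible reading, offered as an assumption rather than something the article states, is that 9216 is the on-the-wire frame size and the difference is the Ethernet header, one 802.1Q tag, and the FCS. The sketch below works through that arithmetic and also derives the ICMP payload size for a don't-fragment ping test; the function names are hypothetical.

```python
ETH_HEADER = 14   # destination MAC + source MAC + EtherType
DOT1Q_TAG = 4     # one 802.1Q VLAN tag (assumed single-tagged)
FCS = 4           # frame check sequence (CRC)
IPV4_HEADER = 20  # assuming no IP options
ICMP_HEADER = 8   # ICMP echo request header


def max_ip_mtu(jumbo_frame_size: int) -> int:
    """Largest IP packet that fits in a tagged frame of the given size."""
    return jumbo_frame_size - ETH_HEADER - DOT1Q_TAG - FCS


def ping_payload(ip_mtu: int) -> int:
    """ICMP payload size that produces an IP packet of exactly ip_mtu bytes."""
    return ip_mtu - IPV4_HEADER - ICMP_HEADER


print(max_ip_mtu(9216))    # IP MTU matching a 9216-byte jumbo frame
print(ping_payload(9000))  # payload for testing a 9000-byte host MTU
print(ping_payload(1500))  # payload for testing a standard 1500-byte MTU
```

On a Linux host, the payload value can be used with `ping -M do -s <payload> <gateway>` to check whether a full-size packet crosses the routed hop without fragmentation.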

01-03-2024 06:17 AM

We changed the MTU setting on the VLANs, and that resolved the issue. That said, I checked the old switch and the MTU setting had not been changed there, so I have no idea how it worked without issue for years.